Definition: AI anxiety

(Artificial Intelligence anxiety) The fear that AI will replace people and jobs in the future. This form of automation anxiety dates back to the late 1700s when mechanical looms replaced workers in the textile industry (see Jacquard loom). Today, people fear AI will take away jobs as it replicates human functions and decision making. However, not all professions are susceptible (see ai-proof jobs), and many people believe we are entering a far better age of intelligence and capability (see superagency).

According to an AI Expert

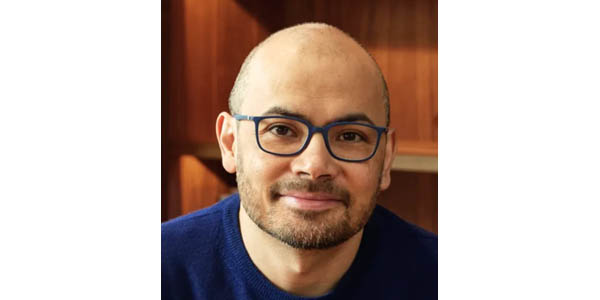

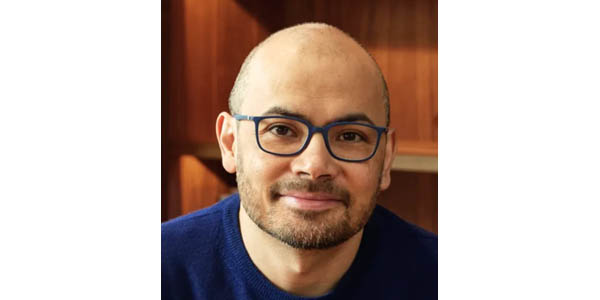

In 2025, Dario Amodei of Anthropic, a major AI company, told the Axios news site that the technology he and other companies are building today could eliminate half of all entry-level white-collar jobs in the next couple of years. Amodei admitted that it "sounds crazy and people just don't believe it!" Amodei is no lightweight. In 2025, he was one of the Time 100, Time Magazine's hundred most influential people in the world. See Anthropic and AI programming.

Biological Weapons

AI is increasingly enabling us to understand biology at a much deeper level. As a result, at the 2024 inaugural event for the Nuclear Threat Initiative (NTI) in Washington, D.C., former Google CEO and chairman Eric Schmidt expressed concern that bad people might use AI to develop new viruses that are undetectable. Such viruses could be used in biological warfare that could wipe out entire populations.

How AI Destroys Institutions

In December 2025, Boston University law professors Woodrow Hartzog and Jessica Silbey wrote an article entitled "How AI Destroys Institutions." The following is its opening paragraph: "If you wanted to create a tool that would enable the destruction of institutions that prop up democratic life, you could not do better than artificial intelligence. Authoritarian leaders and technology oligarchs are deploying AI systems to hollow out public institutions with an astonishing alacrity. Institutions that structure public governance, rule of law, education, healthcare, journalism, and families are all on the chopping block to be 'optimized' by AI."

An Existential Anxiety

Beyond the loss of jobs, many people fear that AI is a threat to humanity. Many celebrities, including Bill Gates, Elon Musk and Stephen Hawking, have expressed concern that society might be irreparably damaged as AI-based systems increasingly replace human judgment. One hopes the decision to launch a war would never be made by an algorithm. However, under the rationale of "eliminating human emotion," this might eventually seem plausible.

The More Immediate Problem

In the meantime, military drones with increasing amounts of autonomy are being developed in the U.S., China, Russia and other countries. It is conceivable that if both sides in a conflict use this type of weaponry, it could get out of control and cause much more destruction than originally intended. This is the scenario that many are increasingly worried about (see smart weapon). Contrast with superagency. See AI in a nutshell, GPT, technology singularity, robot and AI agent.

AI May Be Harming Itself

The entire programming paradigm for AI is getting very complex, perhaps too much so for mere mortals. The programming maze we are creating may eventually become so hard to understand that it causes AI's own downfall. Stay tuned!

A Remarkable Observation

Sooner Than You Think

A Comment on Regulation

Bad Actors

Anxiety in the 1940s

A Warning

According to an AI Expert

In 2025, Dario Amodei of Anthropic, a major AI company, told the Axios news site that the technology he and other companies are building today could eliminate half of all entry-level white-collar jobs in the next couple of years. Amodei admitted that it "sounds crazy and people just don't believe it!" Amodei is no lightweight. In 2025, he was one of the Time 100, Time Magazine's hundred most influential people in the world. See Anthropic and AI programming.

Biological Weapons

AI is increasingly enabling us to understand biology at a much deeper level. As a result, at the 2024 inaugural event for the Nuclear Threat Initiative (NTI) in Washington, D.C., former Google CEO and chairman Eric Schmidt expressed concern that bad people might use AI to develop new viruses that are undetectable. Such viruses could be used in biological warfare that could wipe out entire populations.

How AI Destroys Institutions

In December 2025, Boston University law professors, Woodrow Hartzog and Jessica Silbey wrote an article entitled "How AI Destroys Institutions," and the following is the opening paragraph: "If you wanted to create a tool that would enable the destruction of institutions that prop up democratic life, you could not do better than artificial intelligence. Authoritarian leaders and technology oligarchs are deploying AI systems to hollow out public institutions with an astonishing alacrity. Institutions that structure public governance, rule of law, education, healthcare, journalism, and families are all on the chopping block to be 'optimized' by AI."

An Existential Anxiety

More than just jobs disappearing, many people fear that AI is a threat to humanity. Many celebrities, including Bill Gates, Elon Musk and Stephen Hawking have all expressed concern that society might be irreparably damaged as AI-based systems increasingly replace human judgment. One hopes the decision to launch a war would never be made by an algorithm. However, under the rationale of "eliminating human emotion," this might eventually seem plausible.

The More Immediate Problem

In the meantime, military drones with increasing amounts of autonomy are being developed in the U.S., China, Russia and other countries. It is conceivable that if both sides in a conflict use this type of weaponry, it could get out of control and cause much more destruction than originally intended. This is the scenario that many are increasingly worried about (see smart weapon). Contrast with superagency. See AI in a nutshell, GPT, technology singularity, robot and AI agent.

AI May Be Harming Itself

The entire programming paradigm for AI is getting very complex, perhaps too much so for mere mortals. The programming maze we are creating may become so hard to understand in the future that it will cause its downfall. Stay tuned!

In the 2024 podcast "Our AI future is way worse than you think," best-selling author Yuval Noah Harari observed that as time goes on, instead of training an AI model with human-generated data, the output of previous AIs will be used. In other words, AI will be learning from itself (sounds like calamity written all over it!). A year later Harari said "we are about to conduct the biggest psychological experiment in human history on billions of human guinea pigs, and nobody can predict what the results will be."

Former chief business officer at Google X, Mo Gawdat said in Steven Bartlett's 2023 "Diary of a CEO" podcast that AI will become much more intelligent than humans in only a few months. Gawdat claimed that in a couple years, Bartlett will be interviewing a robot for his podcast, and in 10 years, we will be hiding from the machines. Needless to say, his comments caused controversy. See Google X.

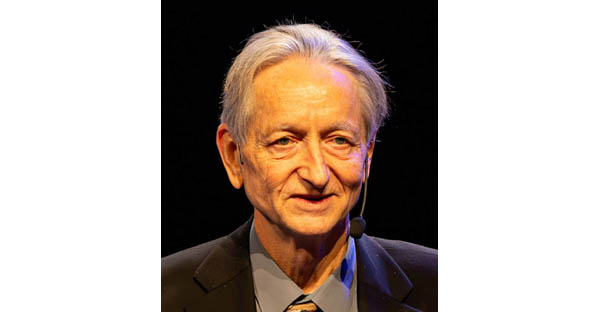

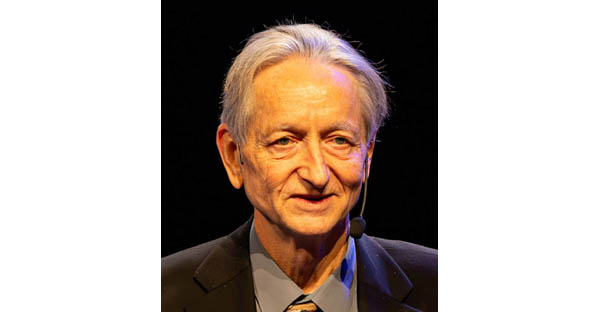

At the 2025 Ai4 conference in Las Vegas, Geoffrey Hinton, Nobel Prize Laureate known as the "Godfather of AI," said "AI systems can now generate misinformation at a scale and speed that outpaces any attempt to correct it. Hinton's comment about whether governments can regulate AI in time was "regulation tends to follow harm, not anticipate it. With AI, that lag could be catastrophic." See Ai4 conference.

Google DeepMind CEO and Nobel Prize winner Demis Hassabis, has warned on several occasions that the worst thing that can happen with AI is that it falls into the wrong hands. He is less worried about AI job loss than he is about bad actors gaining control of this advanced technology to do harm.

The above quote appeared in 1947 in an essay on social criticism entitled "Dialectic of Enlightenment" by German philosophers Max Horkheimer and Theodor W. Adorno. The quote was referenced in "The Loop" by Jacob Ward (see below).

In his 2022 book, science and tech journalist Jacob Ward says our innermost loop is the behavior we inherited, but the outermost loop is the way technology, capitalism, marketing and politics use AI to sample our behavior and reflect those patterns back at us. He claims the real threat is what happens as AI alters future behavior.