Definition: normal computing

(1) Regular computing; traditional computers; routine operations. See classical vs. quantum computing.

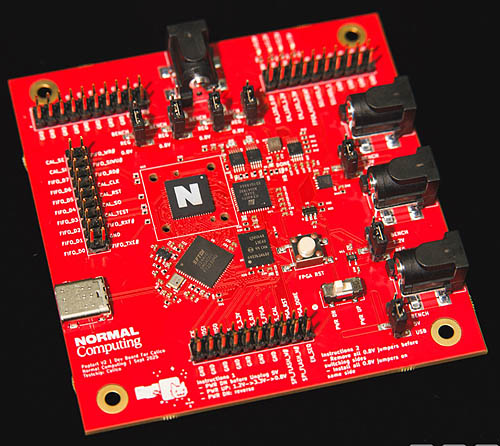

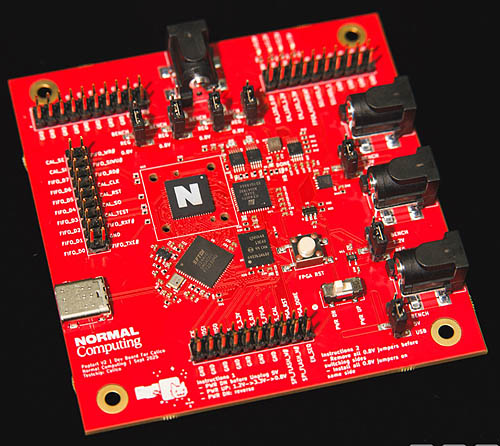

(2) (Normal Computing Corp., New York) Founded in 2022 by former Google Brain and Google X employees who helped pioneer AI for the physical world, Normal builds hardware designed to run AI image and video generation workloads on a thousand times less power. It also develops software that speeds up the chip design process.

Image Generation and Diffusion

The primary way AI generates images is the diffusion method. During training, noise is added to real images step by step until only random noise remains, and the model learns to reverse that process. To create a new image, the model starts from pure random noise and strips it away step by step. Because the starting noise differs each time, the possible outcomes are endless. Inspired by thermodynamics, Normal chips generate this random noise in hardware from the natural heat fluctuations in their transistors. The design is also called a "stochastic" chip (stochastic means random). See AI diffusion.
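As a minimal sketch of the noising half of diffusion, the Python below applies the DDPM-style formula x_t = sqrt(a_t) * x_0 + sqrt(1 - a_t) * noise, where a_t shrinks from nearly 1 toward 0 as steps accumulate. The linear schedule (1e-4 to 0.02 over 1,000 steps) and the function names are illustrative assumptions, and Python's software random number generator stands in for the thermal noise a Normal chip would supply. Generation runs the process in reverse with a trained denoising network, which is not shown.

```python
import math
import random


def noise_schedule(steps, beta_start=1e-4, beta_end=0.02):
    """Assumed linear (DDPM-style) schedule; returns cumulative a_t for each step."""
    alpha_bars = []
    prod = 1.0
    for t in range(steps):
        beta = beta_start + (beta_end - beta_start) * t / max(steps - 1, 1)
        prod *= 1.0 - beta  # a_t is the running product of (1 - beta)
        alpha_bars.append(prod)
    return alpha_bars


def add_noise(x0, alpha_bar, rng):
    """Forward diffusion: blend clean pixel values x0 with Gaussian noise."""
    signal = math.sqrt(alpha_bar)
    noise = math.sqrt(1.0 - alpha_bar)
    return [signal * x + noise * rng.gauss(0.0, 1.0) for x in x0]


if __name__ == "__main__":
    rng = random.Random(0)  # software PRNG standing in for hardware noise
    image = [0.0, 0.25, 0.5, 0.75, 1.0]  # a tiny grayscale "image"
    alpha_bars = noise_schedule(1000)
    print(add_noise(image, alpha_bars[10], rng))  # still close to the original
    print(add_noise(image, alpha_bars[-1], rng))  # essentially pure noise
```

Early steps barely disturb the image, while by the last step the original signal is scaled by well under 1% and the values are dominated by noise.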

For Inference, Not Training

The Normal chip is used for inference, not training. It supplies the randomness needed during image generation to produce varied outcomes; without it, a given prompt would always yield the same image.
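To illustrate why the injected randomness matters, the toy sampler below starts from random noise and repeatedly applies a placeholder "denoise" step, adding a little fresh noise at each step as ancestral diffusion samplers do. The `generate` function, its constants, and the shrink-toward-zero denoiser are hypothetical stand-ins for a trained model, and a seeded `random.Random` replaces the chip's thermal noise so results are reproducible.

```python
import math
import random


def generate(num_pixels, steps, rng, noise_scale=1.0):
    """Toy sampler: noise in, 'image' out. The denoiser is a hypothetical placeholder."""
    # Start from random noise (all zeros if noise_scale is 0).
    x = [noise_scale * rng.gauss(0.0, 1.0) for _ in range(num_pixels)]
    for t in range(steps, 0, -1):
        x = [0.9 * v for v in x]  # placeholder "denoise" step, not a trained network
        if t > 1:
            # Inject fresh noise on every step except the last.
            x = [v + 0.1 * noise_scale * rng.gauss(0.0, 1.0) for v in x]
    # Squash each value into a 0..255 pixel intensity.
    return [round(255 / (1 + math.exp(-v))) for v in x]


if __name__ == "__main__":
    print(generate(8, 50, random.Random(1)))
    print(generate(8, 50, random.Random(2)))
    print(generate(8, 50, random.Random(3), noise_scale=0.0))
```

Different seeds give different images, while `noise_scale=0.0` collapses every run to the same flat gray output, which is the situation the hardware randomness is meant to avoid.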

The Image Generator

This 2025 Normal "thermodynamic" chip uses fluctuations generated in its own hardware as the source of randomness for creating images. Obviously, the company hopes this revolutionary chip will become "Normal." See stochastic computing and AI datacenter.