Redirected from: AI reasoning engine

Definition: inference engine

The part of an AI system that generates answers. An inference engine comprises the hardware and software that provide analyses, make predictions or generate new content. In other words, it is what does the work when people ask ChatGPT, Grok or Gemini a question. For more details, see AI training vs. inference.

Human Rules Were the First AI

Years ago, the first AI systems, known as "expert systems," relied on rules written by human experts and were essentially the first inference engines. However, today's neural networks and GPT architectures are far more capable than such systems. See expert system.
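
To make the idea of rule-based inference concrete, here is a minimal sketch of forward chaining, one common way such systems derive conclusions from if-then rules. The rules and fact names are invented for illustration and do not come from any real expert system.

```python
def forward_chain(facts, rules):
    """Apply if-then rules repeatedly until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # Fire a rule only when all of its conditions are already known.
            if conclusion not in known and set(conditions) <= known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical rules: (conditions, conclusion)
RULES = [
    (("has_fever", "has_cough"), "possible_flu"),
    (("possible_flu", "short_of_breath"), "see_doctor"),
]

derived = forward_chain({"has_fever", "has_cough", "short_of_breath"}, RULES)
```

The second rule can fire only after the first has added `possible_flu`, which is why the loop keeps running until a full pass derives nothing new.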

Disaggregated Inference

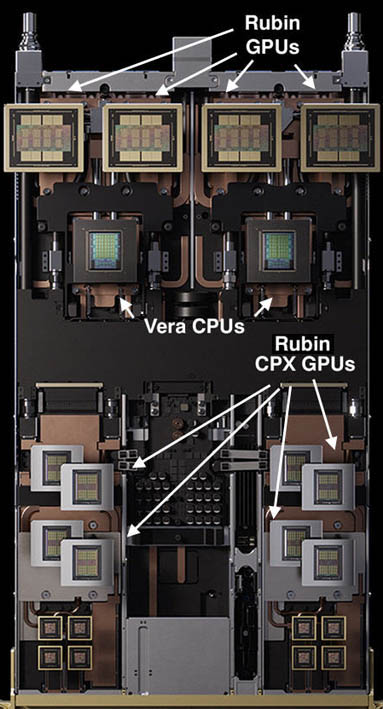

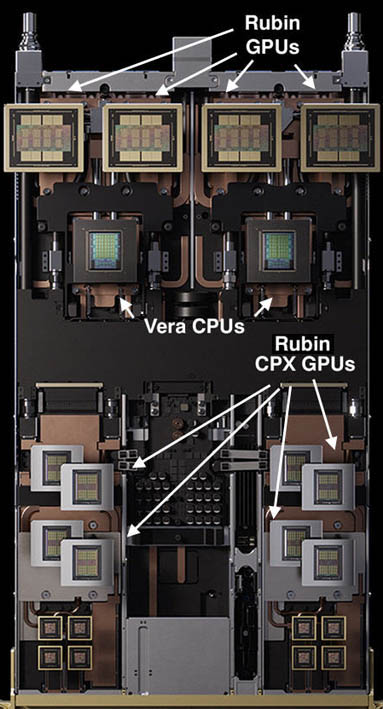

Disaggregated inference splits inference execution into two phases, each of which uses its own type of GPU. Context is the first phase, which requires a huge amount of processing to analyze the input. The second phase, which generates the output, requires fast data transfer and therefore high memory bandwidth. Tailoring the hardware to each phase increases inference performance dramatically. See long-horizon context.
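
The two phases can be pictured with a toy sketch. It is not how any real inference engine is implemented. The function names, the `KVCache` class and the cost counters are all assumptions made for illustration, including the quadratic cost assigned to the context phase. The second phase is called `generation_phase` here on the understanding that it is where output tokens are produced one at a time.

```python
from dataclasses import dataclass, field


@dataclass
class KVCache:
    """Stand-in for the per-token state handed from phase 1 to phase 2."""
    entries: list = field(default_factory=list)


def context_phase(prompt_tokens):
    """Phase 1: process the whole prompt in one pass (compute-heavy).

    The toy cost model counts token-pair comparisons, so the work
    grows quadratically with prompt length.
    """
    cache = KVCache(entries=list(prompt_tokens))
    n = len(prompt_tokens)
    compute_ops = n * n
    return cache, compute_ops


def generation_phase(cache, max_new_tokens):
    """Phase 2: emit one token per step (memory-bandwidth-heavy).

    Each step rereads the entire cache, so the counter tracks how many
    cache entries are read in total.
    """
    output, entries_read = [], 0
    for step in range(max_new_tokens):
        entries_read += len(cache.entries)
        token = f"tok{step}"  # placeholder, not a real model prediction
        output.append(token)
        cache.entries.append(token)
    return output, entries_read


def run_disaggregated(prompt_tokens, max_new_tokens):
    # In a disaggregated setup, each call would run on a different type of GPU.
    cache, compute_ops = context_phase(prompt_tokens)
    output, entries_read = generation_phase(cache, max_new_tokens)
    return output, compute_ops, entries_read
```

Calling `run_disaggregated(["a", "b", "c", "d"], 3)` shows the different cost profiles: the context phase does its work in one large burst, while the generation phase does little arithmetic per step but keeps rereading a growing cache.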

A Disaggregated Inference Solution

This NVIDIA Vera Rubin NVL144 CPX compute tray combines CPX context GPUs with Rubin GPUs and Vera CPUs. Along with switch trays, as many as 18 of these trays are installed in one server rack (see Vera Rubin). (Image courtesy of NVIDIA.)